Instruction Complexity Analyzer

An AI-powered tool that analyzes instructional text and predicts a “complexity score” to aid users with executive function challenges.

Table of Contents

- About The Project

- Key Features

- Technologies Used

- Getting Started

- The Machine Learning Pipeline

- Results

- Contributing

- Data Source & Acknowledgments

About The Project

For individuals with executive function challenges like ADHD or cognitive fog, initiating a task from a complex set of instructions can be a significant barrier. This project, the Instruction Complexity Analyzer, serves as a “cognitive ramp” to address this accessibility issue.

The tool analyzes any given instructional text and predicts a “complexity score.” This allows a user to gauge the mental energy required for a task before they begin, helping them to better manage their time, set expectations, and approach new tasks with more confidence.

Key Features

- Predicts Complexity: Provides a numerical score for any given instructional text.

- Rich Feature Set: Utilizes 7 linguistic and structural metrics for its predictions.

- Optimized Model: Built on a tuned Gradient Boosting Regressor, selected after comparing multiple algorithms.

- Interactive Demo: Includes a user-friendly web application built with Streamlit for live analysis.

Technologies Used

- Python: Primary programming language

- Pandas & NumPy: Data manipulation and analysis

- Scikit-learn: For training and evaluating regression models

- Textstat: For calculating readability scores

- Matplotlib & Seaborn: For data visualization

- Streamlit: For building and deploying the interactive demo

Getting Started

To get a local copy up and running, follow these steps.

Prerequisites

You will need Python 3.8+ and pip installed on your system.

Installation & Setup

- Clone the repository:

git clone [https://github.com/your-username/your-repo-name.git](https://github.com/your-username/your-repo-name.git) cd your-repo-name - Download the Dataset:

- The wikiHow dataset (

wikihow.pickle) is too large to be included in this repository. - Please download it from the source on Kaggle: wikiHow Raw Dataset.

- Place the downloaded

wikihow.picklefile into the root directory of this project.

- The wikiHow dataset (

- Install dependencies:

pip install -r requirements.txt - Run the Jupyter Notebook (Optional):

- To see the full data analysis, feature engineering, and model training process, you can now run the Jupyter Notebook included in this repository.

- Run the Streamlit Demo:

- To use the final, trained model, run the following command in your terminal:

streamlit run app.py

- To use the final, trained model, run the following command in your terminal:

The Machine Learning Pipeline

Our project followed a standard, rigorous machine learning workflow:

- Data Sourcing & Preparation: We used a dataset of wikiHow articles and created a working subset of 10,000 articles.

- Feature Engineering: We converted the raw text of each article into a rich numerical feature set of 10 linguistic and structural metrics.

- Model Training & Tuning: We trained and compared multiple regression models (Linear, Lasso, Ridge, Random Forest, Gradient Boosting). We then performed hyperparameter tuning on the top performers to find the optimal settings.

- Evaluation: We selected our final model based on its performance on a held-out test set, using Root Mean Squared Error (RMSE) as our primary metric.

Results

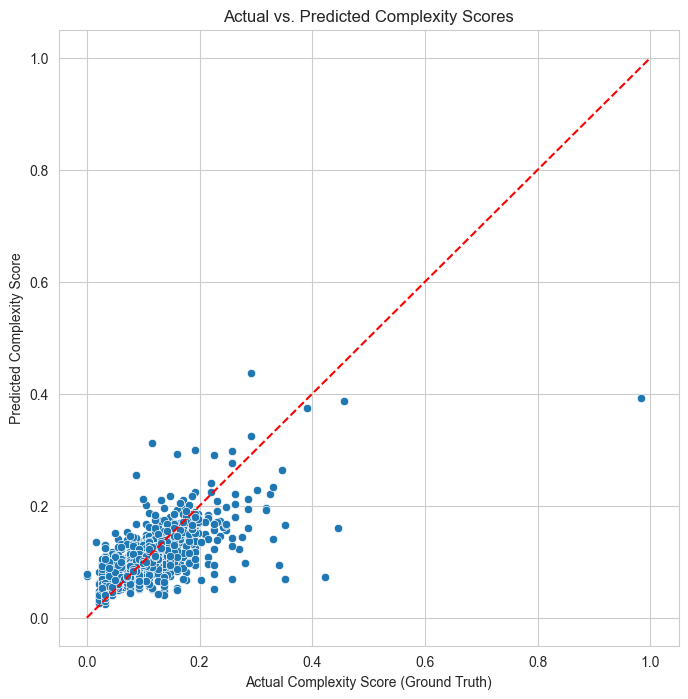

After comparing multiple models and performing hyperparameter tuning on an expanded dataset of 10,000 samples, our final Tuned Gradient Boosting Regressor was the clear winner. It achieved a Root Mean Squared Error (RMSE) of 0.0354 on the test set, indicating a very high degree of accuracy.

Data Visualizations

1. Exploratory Data Analysis

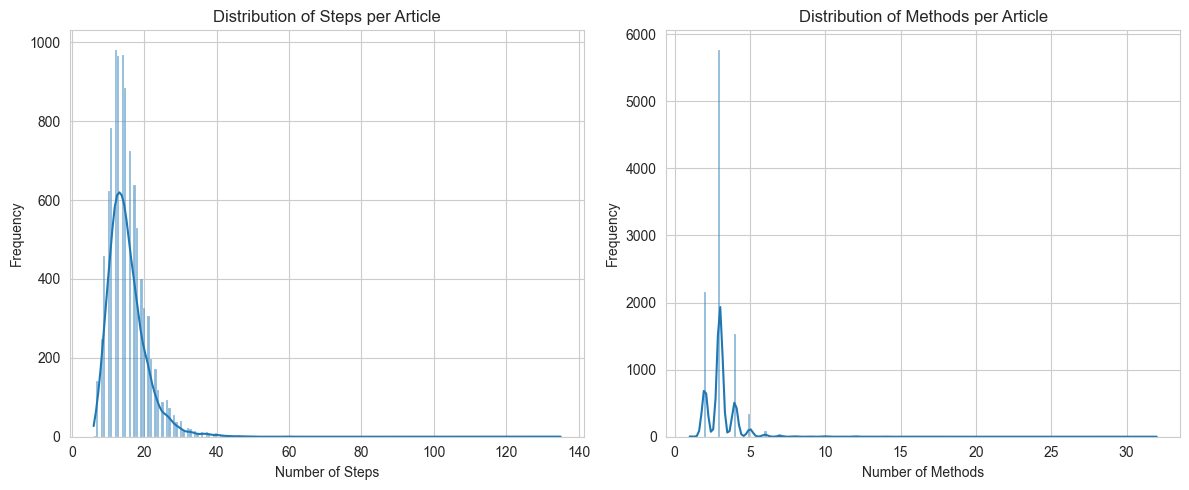

These histograms show the distribution of steps and methods in our 10,000-article dataset. We observed that most articles are moderately complex, with a long tail of more complex instructions, which is ideal for training.

2. Feature Importance

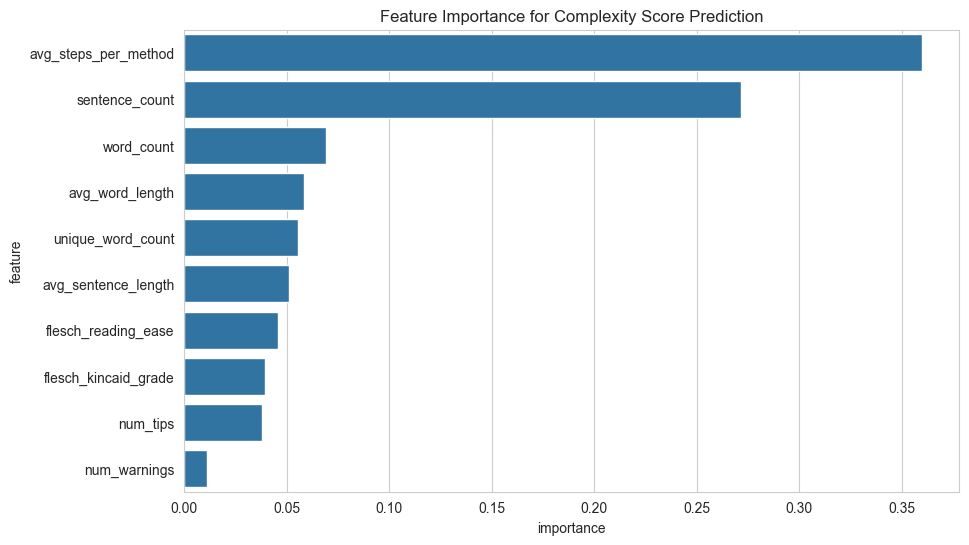

This plot reveals our final model’s strategy. It learned that the instruction’s structure (average steps per method) and its overall volume (sentence and word counts) are the most powerful predictors of complexity.

3. Model Performance: Actual vs. Predicted

This scatter plot visualizes our model’s high accuracy. The tight clustering of predictions around the red “perfect prediction” line confirms the model’s reliability.

Contributing

Contributions are what make the open-source community such an amazing place to learn, inspire, and create. Any contributions you make are greatly appreciated.

- Fork the Project

- Create your Feature Branch (

git checkout -b feature/AmazingFeature) - Commit your Changes (

git commit -m 'Add some AmazingFeature') - Push to the Branch (

git push origin feature/AmazingFeature) - Open a Pull Request

Data Source & Acknowledgments

- Primary Dataset: The wikiHow Raw Dataset from Kaggle was used as the source for all instructional articles.

- This project was developed as part of the AI4ALL Ignite Program.